Shanghai AI Laboratory


Mesh generation is of great value in various applications involving computer graphics and virtual content, yet designing generative models for meshes is challenging due to their irregular data structure and the inconsistent topology of meshes within the same category. In this work, we design a novel sparse latent point diffusion model for mesh generation. Our key insight is to regard point clouds as an intermediate representation of meshes and to model the distribution of point clouds instead. Since meshes can be generated from point clouds via techniques like Shape as Points (SAP), the challenges of directly generating meshes can be effectively avoided. To boost the efficiency and controllability of our mesh generation method, we propose to further encode point clouds into a set of sparse latent points with point-wise semantically meaningful features, and we train two DDPMs in the space of sparse latent points to model, respectively, the distribution of the latent point positions and the distribution of the features at these latent points. We find that sampling in this latent space is faster than directly sampling dense point clouds. Moreover, the sparse latent points enable us to explicitly control both the overall structure and the local details of the generated meshes. Extensive experiments on the ShapeNet dataset show that our sparse latent point diffusion model achieves superior generation quality and controllability compared to existing methods.
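The two-stage sampling described above can be sketched as follows. This is a minimal illustration, not the SLIDE implementation: the number of latent points, the feature size, the linear beta schedule, and the zero-output denoisers `position_eps_model` and `feature_eps_model` are hypothetical placeholders, and the feature model is assumed to be conditioned on the sampled positions, as the phrase "features at these latent points" suggests. Decoding the latent points into a dense point cloud and reconstructing a mesh with SAP are not shown.

```python
import numpy as np

# Hypothetical sizes: the text does not fix these values.
NUM_LATENT = 16   # number of sparse latent points (assumed)
FEAT_DIM = 8      # feature channels per latent point (assumed)
T = 50            # number of diffusion steps (assumed)

# Standard DDPM linear beta schedule and derived quantities.
betas = np.linspace(1e-4, 0.02, T)
alphas = 1.0 - betas
alpha_bars = np.cumprod(alphas)


def ddpm_sample(shape, eps_model, rng, cond=None):
    """Ancestral DDPM sampling: start from Gaussian noise and denoise for T steps.

    eps_model(x_t, t, cond) must return the predicted noise, same shape as x_t.
    """
    x = rng.standard_normal(shape)
    for t in reversed(range(T)):
        eps = eps_model(x, t, cond)
        # Mean of x_{t-1} given x_t and the predicted noise.
        mean = (x - betas[t] / np.sqrt(1.0 - alpha_bars[t]) * eps) / np.sqrt(alphas[t])
        if t > 0:
            # sigma_t^2 = beta_t, one of the variance choices used with DDPMs.
            x = mean + np.sqrt(betas[t]) * rng.standard_normal(shape)
        else:
            x = mean
    return x


# Placeholder denoisers standing in for the two trained networks (hypothetical).
def position_eps_model(x, t, cond):
    return np.zeros_like(x)


def feature_eps_model(x, t, cond):
    # A trained model would use the latent point positions passed in `cond`.
    return np.zeros_like(x)


def sample_sparse_latents(rng):
    # Stage 1: sample latent point positions, shape (NUM_LATENT, 3).
    positions = ddpm_sample((NUM_LATENT, 3), position_eps_model, rng)
    # Stage 2: sample per-point features conditioned on those positions.
    features = ddpm_sample((NUM_LATENT, FEAT_DIM), feature_eps_model, rng, cond=positions)
    return positions, features


rng = np.random.default_rng(0)
pos, feat = sample_sparse_latents(rng)
print(pos.shape, feat.shape)  # (16, 3) (16, 8)
```

Because each denoising step here operates on a small array of latent points rather than on a dense point cloud, the sketch also illustrates one reason sampling in the latent space can be faster.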

SLIDE: Controllable Mesh Generation Through Sparse Latent Point Diffusion Models

Zhaoyang Lyu*, Jinyi Wang*, Yuwei An, Ya Zhang, Dahua Lin, Bo Dai

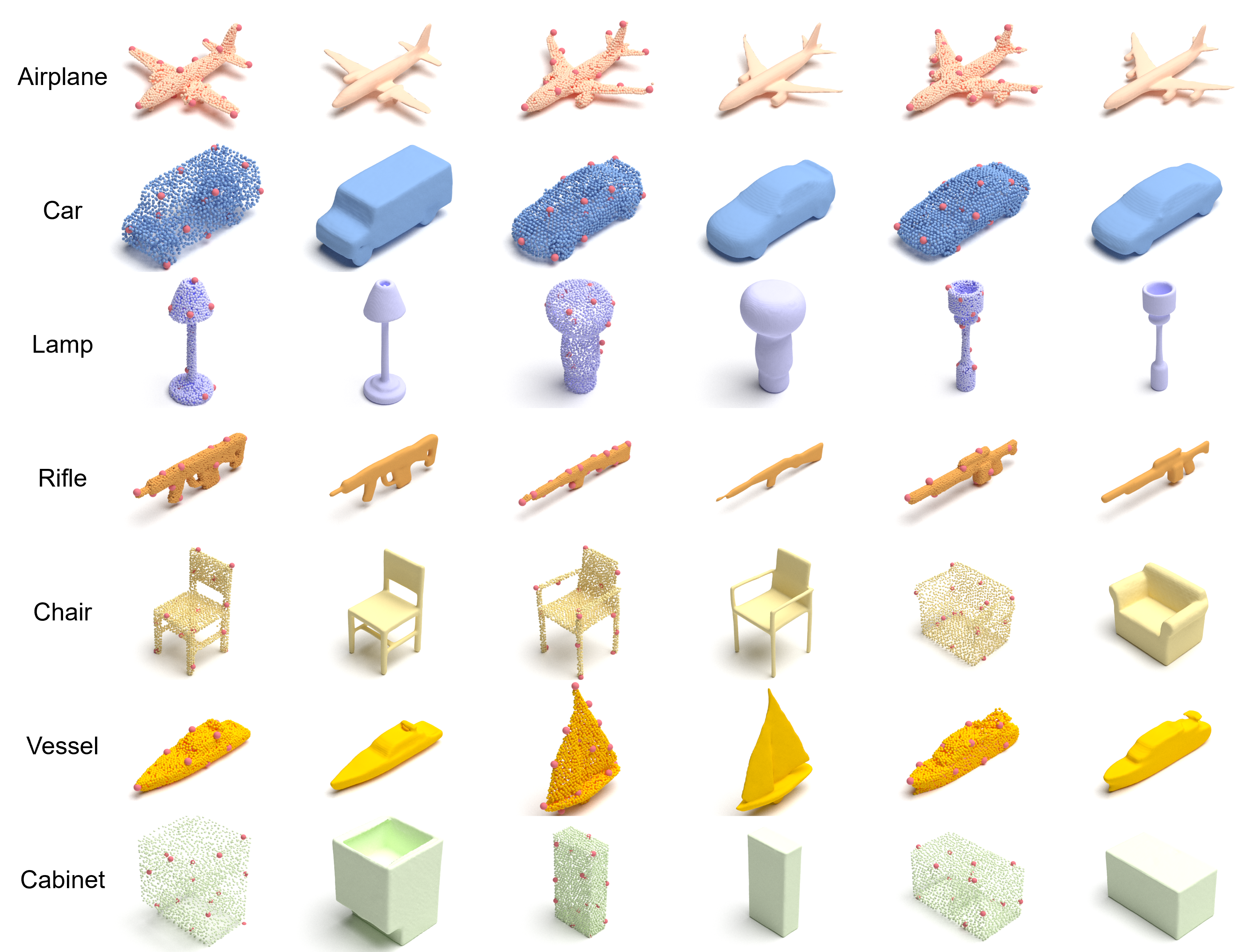

Meshes of different shapes generated by SLIDE, together with the corresponding point clouds and sparse latent points.
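The figure pairs each dense point cloud with a much smaller set of sparse latent points. The text here does not say how those sparse points are selected; farthest point sampling is one common way to pick well-spread points from a cloud and is assumed below purely for illustration. The function name `farthest_point_sampling` and the random stand-in cloud are hypothetical.

```python
import numpy as np


def farthest_point_sampling(points, k, start=0):
    """Greedily pick k indices so each new point is farthest from those already chosen."""
    n = points.shape[0]
    if not 0 < k <= n:
        raise ValueError("k must be in [1, n]")
    chosen = [start]
    # Distance from every point to its nearest chosen point so far.
    min_dist = np.linalg.norm(points - points[start], axis=1)
    for _ in range(k - 1):
        nxt = int(np.argmax(min_dist))
        chosen.append(nxt)
        min_dist = np.minimum(min_dist, np.linalg.norm(points - points[nxt], axis=1))
    return np.array(chosen)


rng = np.random.default_rng(0)
dense = rng.uniform(-1.0, 1.0, size=(2048, 3))  # stand-in for a dense point cloud
idx = farthest_point_sampling(dense, 16)
sparse = dense[idx]
print(sparse.shape)  # (16, 3)
```

Greedy selection keeps the chosen points spread over the whole shape, which is the property one would want if each sparse point is to carry features describing its local region.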

Moving the two latent points at the chair legs controls the position of the legs.

Given two lamps, we interpolate between them by interpolating their sparse latent points.

Given two lamps, we interpolate only their upper parts by interpolating the corresponding sparse latent points.
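Full and partial interpolation of sparse latent points can be sketched as a masked linear blend. This is a minimal sketch under stated assumptions: the two shapes are assumed to have the same number of latent points in one-to-one correspondence (point i of A matches point i of B), "upper part" is assumed to mean y > 0 in a y-up frame, and `interpolate_latents` is a hypothetical helper. Decoding the blended latents back to a mesh is not shown.

```python
import numpy as np


def interpolate_latents(pos_a, feat_a, pos_b, feat_b, alpha, mask=None):
    """Linearly blend two sets of sparse latent points (positions and features).

    Assumes point i of shape A corresponds to point i of shape B.
    If mask is given (one bool per latent point), only masked points are
    blended; the others keep shape A's values.
    """
    w = np.full((pos_a.shape[0], 1), alpha, dtype=float)
    if mask is not None:
        w = np.where(mask[:, None], w, 0.0)
    pos = (1.0 - w) * pos_a + w * pos_b
    feat = (1.0 - w) * feat_a + w * feat_b
    return pos, feat


pos_a = np.array([[0.0, -1.0, 0.0], [0.0, 1.0, 0.0]])
pos_b = np.array([[0.0, -0.5, 0.0], [0.0, 2.0, 0.0]])
feat_a = np.zeros((2, 4))
feat_b = np.ones((2, 4))

# Full interpolation halfway between A and B: p[:, 1] is [-0.75, 1.5].
p, f = interpolate_latents(pos_a, feat_a, pos_b, feat_b, 0.5)

# Blend only the "upper" points (y > 0 in shape A, assuming a y-up frame):
# p_up[:, 1] is [-1.0, 1.5], so the lower point is unchanged.
upper = pos_a[:, 1] > 0
p_up, f_up = interpolate_latents(pos_a, feat_a, pos_b, feat_b, 0.5, mask=upper)
```

Sweeping `alpha` from 0 to 1 would give a sequence of intermediate latent sets; restricting the blend with a mask is one way to read "interpolating only the upper part".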

Combining the sparse latent points of two lamps generates a new lamp.
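One way to combine two shapes is to take some latent points from each. The sketch below is hypothetical: it assumes the split is made by a horizontal plane (upper points from lamp A, lower points from lamp B), and the helper `combine_latents` and its `height` and `axis` parameters are illustrative names, not part of SLIDE.

```python
import numpy as np


def combine_latents(pos_a, feat_a, pos_b, feat_b, height=0.0, axis=1):
    """Build a new latent set: points of A above the plane, points of B at or below it.

    The split plane (coordinate `axis` compared with `height`) is a hypothetical choice.
    """
    keep_a = pos_a[:, axis] > height
    keep_b = pos_b[:, axis] <= height
    pos = np.concatenate([pos_a[keep_a], pos_b[keep_b]], axis=0)
    feat = np.concatenate([feat_a[keep_a], feat_b[keep_b]], axis=0)
    return pos, feat


pos_a = np.array([[0.0, 1.0, 0.0], [0.0, 0.5, 0.0], [0.0, -1.0, 0.0]])
pos_b = np.array([[1.0, 2.0, 0.0], [1.0, -0.5, 0.0], [1.0, -1.5, 0.0]])
feat_a = np.zeros((3, 4))
feat_b = np.ones((3, 4))

# Two upper points from A plus two lower points from B: pos[:, 1] is [1.0, 0.5, -0.5, -1.5].
pos, feat = combine_latents(pos_a, feat_a, pos_b, feat_b)
```

The combined set need not have the same number of points as either input; if a decoder expects a fixed count, the split would have to be chosen so the counts match.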

Moving the two latent points at the chair legs controls the position of the legs. Orange chairs show the results when the rest of the mesh is kept fixed; green chairs show the results when the whole shape is allowed to change.
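The editing step can be sketched as translating selected latent points and then deciding which latent features to regenerate. This is a hypothetical reading of the caption, not the procedure stated in the text: the helpers `move_latent_points` and `features_to_resample`, the leg indices, and the policy that "local" edits regenerate only the moved points' features while "global" edits regenerate all of them are all assumptions.

```python
import numpy as np


def move_latent_points(positions, indices, offset):
    """Return a copy of `positions` with the selected latent points translated by `offset`."""
    moved = positions.copy()
    moved[indices] += np.asarray(offset, dtype=float)
    return moved


def features_to_resample(num_points, indices, local=True):
    """Hypothetical policy: which latent features to regenerate after an edit.

    local=True  -> only the moved points' features (the rest stay fixed).
    local=False -> every point's features (global change).
    """
    mask = np.zeros(num_points, dtype=bool)
    if local:
        mask[indices] = True
    else:
        mask[:] = True
    return mask


positions = np.zeros((4, 3))
leg_idx = [2, 3]  # hypothetical indices of the two chair-leg latent points
moved = move_latent_points(positions, leg_idx, (0.1, 0.0, 0.0))
local_mask = features_to_resample(4, leg_idx, local=True)    # [False, False, True, True]
global_mask = features_to_resample(4, leg_idx, local=False)  # all True
```

Under this assumption, the masked features would then be resampled by the feature model conditioned on the edited positions, with unmasked features held at their previous values.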

More examples of SLIDE generation results.

@misc{https://doi.org/10.48550/arxiv.2303.07938,
  doi = {10.48550/ARXIV.2303.07938},
  url = {https://arxiv.org/abs/2303.07938},
  author = {Lyu, Zhaoyang and Wang, Jinyi and An, Yuwei and Zhang, Ya and Lin, Dahua and Dai, Bo},
  keywords = {Computer Vision and Pattern Recognition (cs.CV), FOS: Computer and information sciences},
  title = {Controllable Mesh Generation Through Sparse Latent Point Diffusion Models},
  publisher = {arXiv},
  year = {2023},
  copyright = {Creative Commons Attribution 4.0 International}
}